I’m building a new app on macOS. It’s my first time building with Swift (and SwiftUI) on the latest Xcode (15.2). My app needs UPnP to connect to a smart device on the network, so I decided to use SwiftUPnP. However, I quickly ran into a problem: the library couldn’t start an HTTP listener, which is a prerequisite for service discovery. The error was a cryptic “Couldn’t start http server on port 0. The operation couldn’t be completed. (Swifter.SocketError error 2.)”. Copilot and ChatGPT only gave a generic answer about not using 0 as a port.

Looking deeper into the code, SwiftUPnP tries to find a free port, falling back to 0 if it fails. Something had clearly gone wrong, since there are plenty of free ports on my machine. The library uses Darwin.bind to test whether a port is free, but the call always returned -1. That return value only says the bind failed, which the library seems to treat as the port not being free. Searching Google didn’t turn up much useful information.
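In hindsight, a quicker way to narrow this down would have been to call bind myself and look at errno, since -1 alone doesn’t say why the call failed. Below is a minimal sketch of such a probe. It is not SwiftUPnP’s actual code, and the helper name `bindErrno` is made up for illustration. My assumption (not something I verified) is that a permission-style error such as EPERM, rather than EADDRINUSE, would point at the system blocking the call instead of the port being taken.

```swift
import Darwin

/// Hypothetical helper: tries to bind a TCP socket to `port` on all IPv4
/// interfaces. Returns nil on success, or the errno value on failure.
func bindErrno(port: UInt16) -> Int32? {
    let fd = socket(AF_INET, SOCK_STREAM, 0)
    guard fd >= 0 else { return errno }
    defer { close(fd) }

    var addr = sockaddr_in()
    addr.sin_len = UInt8(MemoryLayout<sockaddr_in>.size)
    addr.sin_family = sa_family_t(AF_INET)
    addr.sin_port = port.bigEndian            // network byte order
    addr.sin_addr = in_addr(s_addr: INADDR_ANY)

    // bind expects a generic sockaddr pointer, so rebind the memory.
    // errno is read immediately after the failing call, inside the closure.
    return withUnsafePointer(to: &addr) { ptr in
        ptr.withMemoryRebound(to: sockaddr.self, capacity: 1) { sa in
            Darwin.bind(fd, sa, socklen_t(MemoryLayout<sockaddr_in>.size)) == 0 ? nil : errno
        }
    }
}

// Port 0 asks the kernel to pick any free port.
if let code = bindErrno(port: 0) {
    print("bind failed: errno \(code) (\(String(cString: strerror(code))))")
} else {
    print("bind succeeded")
}
```

If this fails even for port 0, the problem is unlikely to be a busy port.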

Is it because the app is not signed? I’ve been compiling the app as an unverified developer. I know that for iOS apps, your app needs to be signed with certain entitlements for greater access to device functionality. Do you now need to do the same for macOS?

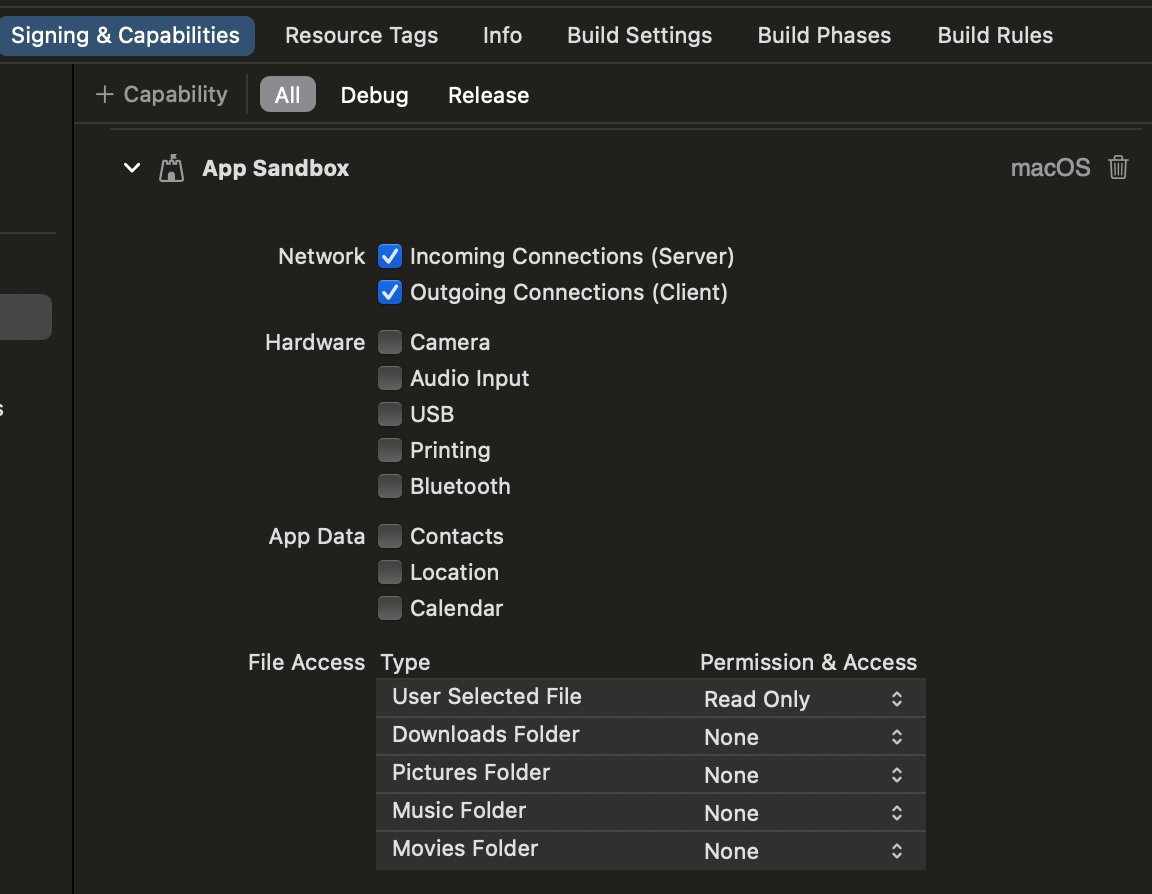

It turns out that there are now a bunch of App Sandbox options for macOS apps, and by default they don’t let your app accept incoming connections or make outgoing ones.
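As far as I understand, those checkboxes end up as keys in the target’s `.entitlements` file. Here is a sketch of what I’d expect that file to contain, assuming the standard App Sandbox entitlement keys. The file Xcode generates for your project may contain additional entries.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd">
<plist version="1.0">
<dict>
    <!-- App Sandbox is enabled for the target -->
    <key>com.apple.security.app-sandbox</key>
    <true/>
    <!-- Incoming connections: needed to bind and listen on a port -->
    <key>com.apple.security.network.server</key>
    <true/>
    <!-- Outgoing connections: needed to reach devices on the network -->
    <key>com.apple.security.network.client</key>
    <true/>
</dict>
</plist>
```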

Ticking those boxes solved this issue. Hopefully this will help someone in the future!